Why Synthetic Cognition Breaks the Dependency Trap and Creates Intelligence Built for the Next 20 Years, Not the Last 20 Months

Most AI systems today are quietly fragile. They appear powerful, stable, and impressive. Under the surface, almost every AI product in the world depends on one thing:

A single large language model.

If that model changes, the system changes.

If that model drifts, the system drifts.

If that model shuts down, the entire product collapses.

This isn’t a technical inconvenience. It’s a structural risk. A world that runs on AI is being built on shifting sand. Synthetic Cognition takes a different path, one designed from the beginning to survive change rather than be threatened by it.

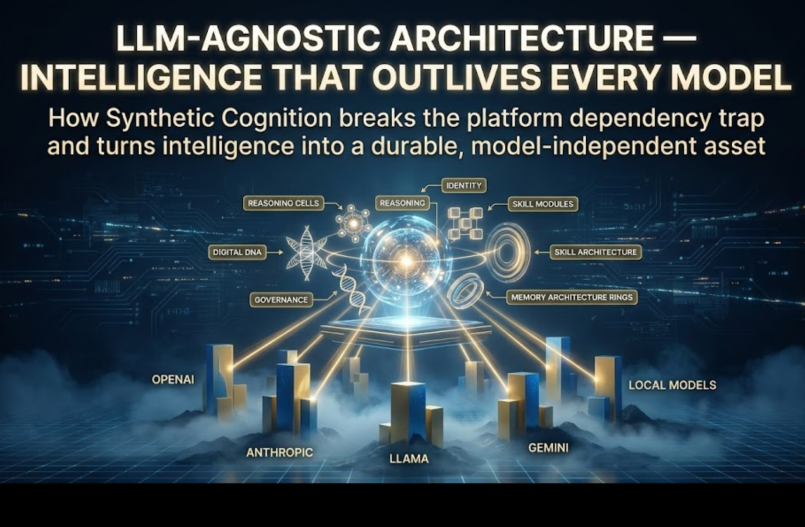

Synthetic Cognition is LLM-agnostic at the smallest possible unit of intelligence. Every reasoning cell, every skill, and every persona can use the right model for the right task, and swap that model at any time, without breaking identity, memory, or behavior.

This is how intelligence becomes long-lived instead of short-lived. This is how personas outlive the platform they run on. This is how AI becomes future-proof by design.

Why Model Independence Is Essential

The LLM landscape evolves faster than almost any other technology in recent memory.

New models appear monthly.

Costs change weekly.

Capabilities shift unpredictably.

APIs break without warning.

Vendors retire products overnight.

Systems built on a single model inherit this volatility. Systems built on Synthetic Cognition do not. By design, LLM-agnostic architecture ensures:

- Identity is stored outside the model

- Memory lives independently from model context

- Reasoning cells can use any model, at any time

- Skills can blend multiple models simultaneously

- Fallback models can take over instantly when a model fails

- New capabilities can be integrated without a rewrite

- The architecture remains stable across generations

This turns intelligence into an asset instead of a dependency.
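The fallback behavior listed above can be illustrated with a minimal sketch. It assumes a "model" is simply a callable that takes a prompt and returns text; `call_with_fallback`, `AllModelsFailed`, and the two stand-in models are hypothetical names invented for this example, not part of any published Synthetic Cognition API.

```python
from typing import Callable, Sequence

# Assumption: a "model" is any callable that maps a prompt to text.
ModelFn = Callable[[str], str]


class AllModelsFailed(RuntimeError):
    """Raised when every model in the chain has failed."""


def call_with_fallback(
    chain: Sequence[tuple[str, ModelFn]], prompt: str
) -> tuple[str, str]:
    """Try each (name, model) pair in order; return (name_used, reply)."""
    errors: list[str] = []
    for name, model in chain:
        try:
            return name, model(prompt)
        except Exception as exc:  # a real system would catch narrower errors
            errors.append(f"{name}: {exc}")
    raise AllModelsFailed("; ".join(errors))


# Stand-in models for illustration only; no real vendor API is called.
def retired_model(prompt: str) -> str:
    raise ConnectionError("endpoint retired")


def backup_model(prompt: str) -> str:
    return f"backup reply to: {prompt}"


used, reply = call_with_fallback(
    [("primary", retired_model), ("backup", backup_model)],
    "Summarize the ticket",
)
print(used, "->", reply)
```

The caller never names a vendor directly. It only supplies an ordered chain, so retiring or replacing a model means editing that chain rather than the calling code.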

How LLM Agnosticism Actually Works

Synthetic Cognition separates what a persona is from how it thinks. A persona’s Digital DNA stores:

- identity

- reasoning structure

- skill architecture

- memory system

- tonal and behavioral rules

- boundaries and constraints

None of this is held inside the LLM. The model becomes a tool, not the foundation. Every reasoning cell can independently specify:

- a primary model

- a fallback model

- a cheaper model for repetitive tasks

- a highly specialized model for domain work

- a local or self-hosted model for privacy

- a multimodal model for perception tasks

This means a single persona might simultaneously use:

- GPT for communication

- Claude for analysis

- Gemini for multimodal perception

- Llama for offline reasoning

- A fine-tuned model for compliance tasks

All at once.

All seamlessly.

All with clarity and control.
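One way to picture this separation is as plain data structures. The sketch below is an assumption about how such a layout could look, not the actual Digital DNA format: `PersonaDNA`, `ReasoningCell`, `Persona`, and all field names are hypothetical, and the model names are plain labels rather than real API identifiers.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class PersonaDNA:
    """Identity and rules held outside any model (illustrative fields)."""
    name: str
    tone: str
    boundaries: tuple[str, ...] = ()


@dataclass
class ReasoningCell:
    """One unit of reasoning with its own model choices."""
    purpose: str
    primary: str
    fallback: Optional[str] = None


@dataclass
class Persona:
    dna: PersonaDNA
    cells: dict[str, ReasoningCell] = field(default_factory=dict)

    def models_in_use(self) -> set[str]:
        """Every primary model referenced by this persona's cells."""
        return {cell.primary for cell in self.cells.values()}

    def swap_model(self, cell_name: str, new_primary: str) -> None:
        """Point one cell at a different model; the DNA is untouched."""
        self.cells[cell_name].primary = new_primary


persona = Persona(
    dna=PersonaDNA(name="Ada", tone="calm and precise",
                   boundaries=("no legal advice",)),
    cells={
        "communication": ReasoningCell("draft replies", "gpt", fallback="llama"),
        "analysis": ReasoningCell("analyze documents", "claude"),
        "perception": ReasoningCell("read images", "gemini"),
        "offline": ReasoningCell("reason without network", "llama"),
    },
)

dna_before = persona.dna
persona.swap_model("analysis", "llama")  # only this one cell changes
print(sorted(persona.models_in_use()))
print(persona.dna == dna_before)
```

Because `PersonaDNA` is frozen and holds no reference to any model, swapping the model behind a cell cannot alter the persona's identity, tone, or boundaries.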

Why This Is Not “Model Switching”

Most platforms claim multi-model support, but in practice they offer:

- one model at a time

- swapping by hand

- switching, not architecture

Synthetic Cognition is different. Model selection happens at the cellular level:

- each reasoning cell

- each step in a skill

- each perceptor

- each activator

- each memory operation

- each branch of a flow

Every component chooses its own model with intention. This allows precision engineering:

- the best model for reasoning

- the cheapest model for volume tasks

- compliant models for regulated industries

- local models for sensitive workflows

- specialized models for niche domains

It’s not a feature. It’s a philosophical shift.
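Per-component selection can be sketched as a small routing function. This is an illustrative assumption about how components might declare their needs, not the documented mechanism: `ModelSpec`, `select_model`, the catalog entries, the tags, and the cost figures are all made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpec:
    name: str
    cost_per_1k_tokens: float  # illustrative numbers, not real pricing
    tags: frozenset[str]


def select_model(catalog: list[ModelSpec], required_tags: set[str]) -> ModelSpec:
    """Return the cheapest model whose tags cover all required tags."""
    candidates = [m for m in catalog if required_tags <= m.tags]
    if not candidates:
        raise LookupError(f"no model satisfies {sorted(required_tags)}")
    return min(candidates, key=lambda m: m.cost_per_1k_tokens)


CATALOG = [
    ModelSpec("hosted-large", 0.030, frozenset({"reasoning", "multimodal"})),
    ModelSpec("hosted-small", 0.002, frozenset({"volume"})),
    ModelSpec("local-medium", 0.004, frozenset({"reasoning", "local", "volume"})),
]

# Each component states what it needs rather than naming a vendor.
COMPONENT_NEEDS = {
    "reasoning_cell": {"reasoning"},
    "perceptor": {"multimodal"},
    "memory_operation": {"volume"},
    "sensitive_branch": {"local", "reasoning"},
}

for component, needs in COMPONENT_NEEDS.items():
    print(component, "->", select_model(CATALOG, needs).name)
```

Expressing needs as tags instead of model names is a design choice in this sketch: adding a new model to the catalog can change routing without touching any component.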

The Persona Outlives the Model

Because a persona’s DNA, memory architecture, and decision structure live outside the LLM, personas remain stable even when models change. When a model is upgraded, the persona keeps:

- its identity

- its tone

- its reasoning style

- its memory

- its skills

- its priorities

- its boundaries

When a model disappears, the persona simply uses another. Traditional AI breaks. Synthetic Cognition adapts. This is the difference between intelligence as a product and intelligence as a platform.
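A minimal sketch can make the continuity claim concrete. It assumes that memory is stored as plain data and that the full context is rebuilt for whichever model handles a given call; `PersonaState` and both stand-in models are hypothetical and exist only for illustration.

```python
import json
from typing import Callable

# Assumption: a "model" is any callable that maps a prompt to text.
ModelFn = Callable[[str], str]


class PersonaState:
    """Identity and memory kept as plain data, independent of any model."""

    def __init__(self, identity: str, tone: str) -> None:
        self.identity = identity
        self.tone = tone
        self.memory: list[str] = []

    def build_prompt(self, user_input: str) -> str:
        """Rebuild the full context from stored state on every call."""
        remembered = "\n".join(self.memory) or "(nothing yet)"
        return (
            f"You are {self.identity}. Tone: {self.tone}.\n"
            f"Known facts:\n{remembered}\n"
            f"User: {user_input}"
        )

    def ask(self, model: ModelFn, user_input: str) -> str:
        reply = model(self.build_prompt(user_input))
        self.memory.append(f"user said: {user_input}")
        return reply

    def to_json(self) -> str:
        """Serialize the state so it can outlive any one model or process."""
        return json.dumps(
            {"identity": self.identity, "tone": self.tone, "memory": self.memory}
        )


# Stand-in models; neither keeps any state between calls.
def model_a(prompt: str) -> str:
    return "[model-a] noted"


def model_b(prompt: str) -> str:
    found = "1234" in prompt
    return "[model-b] order 1234 found in context" if found else "[model-b] no order in context"


persona = PersonaState("Ada, a support analyst", "calm and precise")
persona.ask(model_a, "My order number is 1234")
answer = persona.ask(model_b, "What was my order number?")  # different model
print(answer)
```

The second model never saw the first conversation, yet it receives the remembered fact because the persona, not the model, owns the memory.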

Why This Matters for the Next Decade

Over the next several years, the model ecosystem will become:

- more fragmented

- more specialized

- more region-restricted

- more regulated

- more domain-specific

Any system that cannot fluidly adapt will collapse under this complexity. Synthetic Cognition is built for this world. The architecture guarantees:

- no vendor lock-in

- no forced migrations

- no system rewrites

- no dependency on one model

- no risk of losing persona continuity

- no disruption in reasoning or memory

This makes AI stable enough for real industries, not just demos.

What This Means for Enterprises

Enterprises face the highest stakes:

- compliance and governance

- data residency requirements

- multi-cloud mandates

- cost optimization

- system longevity

- cross-team interoperability

LLM-agnostic architecture solves these challenges by allowing:

- private on-prem or VPC models

- hybrid open + closed model stacks

- regulated model selection per workflow

- cost-controlled routing across tasks

- seamless failover when models shift

- multi-model orchestration inside one persona

This is the only practical path to real enterprise AI adoption.

Why This Architecture Will Outlive the Model Arms Race

Models will rise, fall, specialize, and fragment, but intelligence must remain stable. Synthetic Cognition separates the layers:

Models evolve.

Personas endure.

A persona becomes a structured identity that can plug into any model today, and any model tomorrow.

This is how intelligence becomes timeless.

Not tied to a single vendor.

Not trapped inside a single model.

Not vulnerable to platform churn.

A persona becomes a long-lived intelligence with lineage, memory, history, and structure that outlives every generation of LLMs. The intelligent future belongs to systems that can adapt without breaking. Synthetic Cognition was built for that future.